The aim of this research project was to solve a problem that users of typical VR systems, such as the Oculus Rift and HTC Vive, encounter frequently: being unable to notice changes in their real-world environment while immersed in the virtual one. There are countless examples of children scaring their parents, people hitting their family or friends, and unsuspecting pets tripping their owners because the user could not tell where these dynamic objects were while in VR.

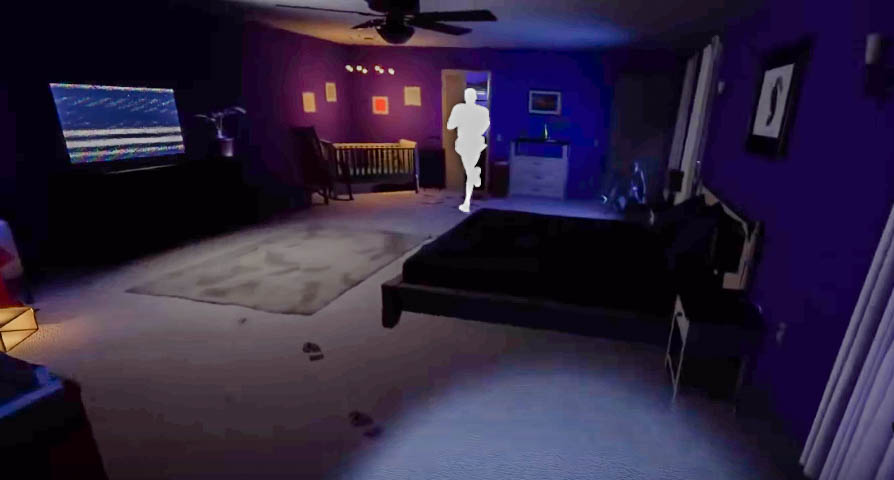

With this in mind, the study investigated how different sensory modalities (visual, auditory, and their combination) affect the noticeability and comprehension of notifications designed to alert VR users when a person enters their area of use. In addition, we were curious how an orientation-type notification aids perception of alerts that appear outside a VR user's visual field. Results of a survey indicated that participants perceived the auditory modality as more effective regardless of notification type. An experiment corroborated these findings for the person notifications; however, the visual modality was in practice more effective for orientation notifications. Examples of prototype visual notifications are shown below.